Building microservices through Event Driven Architecture part 25: automate workflows to build, test, and deploy code from GitHub using GitHub Actions


This tutorial is the 25th episode of a series: Building microservices through Event Driven Architecture.

GitHub Actions is a continuous integration and continuous delivery (CI/CD) platform that allows you to automate your build, test, and deployment pipeline. You can create workflows that build and test every pull request to your repository, or deploy merged pull requests to production. To learn more about GitHub Actions, you can follow this link: https://docs.github.com/en/actions/learn-github-actions/understanding-github-actions

In this tutorial, I will use GitHub Actions to build and deploy an Azure Kubernetes Service (AKS) cluster, an Azure SQL Database with a private endpoint, and an Azure Container Registry, using Terraform and Helm charts.

Set up GitHub Actions

Deploy infrastructure

To enable Kubernetes locally, I just need to download and install Docker Desktop and activate Kubernetes in it.

Workflow

-

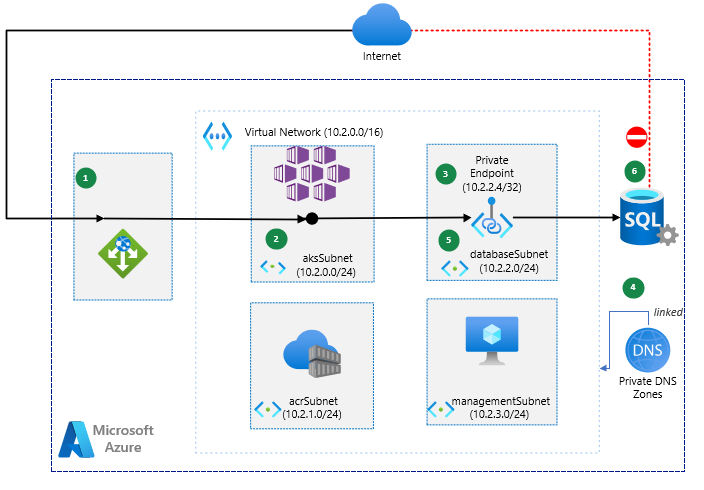

The web application gateway receives an HTTP request from the internet and should requires an API call to the Azure SQL Database.

- The web api connects to the virtual network through a virtual interface mounted in the aksSubnet of the virtual network LogCorner.EduSync.Speech.Vnet

-

Azure Private Link sets up a private endpoint for the Azure SQL Database in the databaseSubnet of the virtual network.

-

The web api sends a query for the IP address of the Azure SQL Database. The query traverses the virtual interface in the aksSubnet. The CNAME of the Azure SQL Database directs the query to the private DNS zone. The private DNS zone returns the private IP address of the private endpoint set up for the Azure SQL Database.

-

The web api connects to the Azure SQL Database through the private endpoint in the databaseSubnet.

-

The Azure SQL Database firewall allows only traffic coming from the databaseSubnet to connect. The database is inaccessible from the public internet.
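To check the DNS step from a machine inside the virtual network (for example the management virtual machine), a short Python sketch can resolve the server name and verify that the answer is a private address inside the databaseSubnet. The server name and the 10.0.2.0/24 range below are hypothetical placeholders, not values from this deployment.

```python
import ipaddress
import socket

# Hypothetical values: replace with your SQL server FQDN and databaseSubnet range
SQL_SERVER_FQDN = "my-sql-server.database.windows.net"
DATABASE_SUBNET = "10.0.2.0/24"


def is_in_database_subnet(ip: str, subnet: str = DATABASE_SUBNET) -> bool:
    """Return True if ip is a private address inside the given subnet."""
    address = ipaddress.ip_address(ip)
    return address.is_private and address in ipaddress.ip_network(subnet)


def resolve(fqdn: str) -> str:
    """Resolve fqdn with the machine's resolver (the private DNS zone inside the VNet)."""
    return socket.gethostbyname(fqdn)
```

Run from inside the virtual network, `is_in_database_subnet(resolve(SQL_SERVER_FQDN))` should be True; from the public internet the same name is expected to resolve to a public address instead.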

Components

This scenario uses the following Azure services:

- Azure Application Gateway distributes incoming traffic across the Kubernetes cluster, ensuring high availability and scalability.

- Azure Kubernetes Service (AKS): hosts the web API, allowing autoscaling and high availability without having to manage the infrastructure. For now, the AKS cluster has a public IP address; I will disable it in upcoming tutorials.

- Azure Container Registry: hosts the Docker images deployed in the Kubernetes cluster. For now, the Azure Container Registry allows public access; I will disable it in upcoming tutorials.

- Azure SQL Database is a general-purpose relational database managed service that supports relational data, spatial data, JSON, and XML.

- Azure Virtual Network is the fundamental building block for private networks in Azure. Azure resources like virtual machines (VMs) can securely communicate with each other, the internet, and on-premises networks through Virtual Networks.

- Azure Private Link provides a private endpoint in a Virtual Network for connectivity to Azure PaaS services like Azure Storage and SQL Database, or to customer or partner services.

- Azure DNS hosts private DNS zones that provide a reliable, secure DNS service to manage and resolve domain names in a virtual network without the need to add a custom DNS solution.

- Azure virtual machine: used to connect to and manage the SQL Server database through the private endpoint.

- Azure Bastion host: used to connect securely to the Azure virtual machine.

The Terraform files that describe the infrastructure live in the iac folder, and the workflow that deploys them can be found here: .\LogCorner.EduSync.Speech.Command\.github\workflows\deploy-to-aks-helm.yml

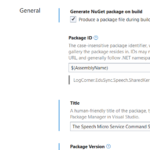

This is a GitHub Actions workflow that defines two environment variables: ACR_LOGON_SERVER and IMAGE_NAME.

The ACR_LOGON_SERVER variable is set to the login server URL for an Azure Container Registry (ACR).

The IMAGE_NAME variable is set to a Docker image name in the ACR. This name is constructed from the secrets.ACR_NAME secret and the github.run_number value, which starts at 1 and increases with each new run of the workflow (re-running a run keeps its number).
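The way the workflow composes these two values can be mirrored in a few lines of Python, which is handy to predict the tag a given run will push. This is only an illustration: the registry name logcornerregistry and the run number 42 are hypothetical.

```python
def acr_logon_server(acr_name: str) -> str:
    """Mirror of ACR_LOGON_SERVER: <acr name>.azurecr.io."""
    return f"{acr_name}.azurecr.io"


def image_name(acr_name: str, run_number: int,
               repository: str = "logcorner-edusync-speech-command") -> str:
    """Mirror of IMAGE_NAME: <login server>/<repository>:<run number>."""
    return f"{acr_logon_server(acr_name)}/{repository}:{run_number}"


# "logcornerregistry" is a hypothetical registry name
print(image_name("logcornerregistry", 42))
```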

This is a GitHub workflow that automates the build and deployment of a Docker container image to Azure Kubernetes Service (AKS) using Terraform and Helm.

The workflow consists of three jobs: "setup-iac", "build-deploy-image", and "deploy-to-aks". The first job sets up the environment variables needed for Terraform to authenticate and access the Azure resources, and it runs Terraform to validate, plan, and apply the infrastructure changes.

The second job builds and pushes the Docker image to the Azure Container Registry, and the third job deploys the container image to AKS using Helm.

The workflow is triggered by a push to the repository, or manually through the workflow_dispatch event.
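Because each job lists its predecessor in needs, the three jobs form a chain that GitHub Actions runs one after the other. The sketch below models that dependency graph with Python's graphlib to show the resulting order; it illustrates the needs semantics only and says nothing about how the GitHub runner is implemented.

```python
from graphlib import TopologicalSorter

# Each job maps to the set of jobs listed in its `needs:` key
JOB_NEEDS = {
    "setup-iac": set(),
    "build-deploy-image": {"setup-iac"},
    "deploy-to-aks": {"build-deploy-image"},
}


def execution_order(needs: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError on circular needs."""
    return list(TopologicalSorter(needs).static_order())


print(execution_order(JOB_NEEDS))
```

If a job listed in needs fails, GitHub Actions skips the jobs that depend on it, so a failed Terraform run never reaches the image build or the Helm deployment.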

```yaml
name: Container Workflow

on:
  push:
  workflow_dispatch:

env:
  ACR_LOGON_SERVER: ${{ secrets.ACR_NAME }}.azurecr.io
  IMAGE_NAME: ${{ secrets.ACR_NAME }}.azurecr.io/logcorner-edusync-speech-command:${{ github.run_number }}

jobs:
  setup-iac:
    env:
      ARM_CLIENT_ID: ${{ secrets.SERVICE_PRINCIPAL_ID }}
      ARM_CLIENT_SECRET: ${{ secrets.SERVICE_PRINCIPAL_PASSWORD }}
      ARM_SUBSCRIPTION_ID: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
      ARM_TENANT_ID: ${{ secrets.AZURE_TENANT_ID }}
    defaults:
      run:
        working-directory: ./iac
    permissions:
      contents: read
      id-token: write
    runs-on: ubuntu-latest
    steps:
      # Check out the repo so Terraform can read the files in ./iac
      - name: 'Checkout GitHub Action'
        uses: actions/checkout@v3

      - uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: 1.1.4
          cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

      - name: Terraform Init
        id: init
        run: terraform init

      - name: Terraform Validate
        id: validate
        run: terraform validate

      - name: Terraform Plan
        id: plan
        run: terraform plan -var-file="dev.tfvars" -input=false -out=tfplan
        continue-on-error: false

      # Apply the plan file saved by the previous step. The plan step defines
      # no plan_file output, and a saved plan already holds the variable values.
      - name: Terraform Apply
        id: apply
        run: terraform apply -auto-approve -input=false tfplan
```

The previous snippet describes a Terraform pipeline: it defines a job called setup-iac whose steps deploy the infrastructure as code. Here's what each step does:

- Checkout GitHub Action: This step checks out the current repository, so that Terraform can access the configuration files.

- hashicorp/setup-terraform@v2: This action installs and sets up Terraform in the environment.

- Terraform Init: This step initializes Terraform and sets up the backend configuration.

- Terraform Validate: This step checks the syntax and configuration of the Terraform files, to make sure that they are valid.

- Terraform Plan: This step creates an execution plan for Terraform to apply, based on the configuration files.

- Terraform Apply: This step applies the changes defined in the execution plan generated by Terraform, based on the configuration files.

Overall, this job sets up Terraform, then plans and applies the Terraform configuration to deploy the infrastructure in the target environment, using the dev.tfvars variable file and the -auto-approve flag.
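The fail-fast behavior of these steps can be approximated locally in Python: run each Terraform command in order from ./iac and stop at the first non-zero exit code, which is what happens to a job whose steps keep continue-on-error set to false. This is a minimal sketch, assuming Terraform is on the PATH; the runner is injected so the sequencing can be checked without calling Terraform.

```python
import subprocess
from typing import Callable, Sequence


def terraform_commands(var_file: str = "dev.tfvars", plan_file: str = "tfplan") -> list[list[str]]:
    """The setup-iac steps as argument lists, in workflow order."""
    return [
        ["terraform", "init"],
        ["terraform", "validate"],
        ["terraform", "plan", f"-var-file={var_file}", "-input=false", f"-out={plan_file}"],
        # The saved plan already contains the variable values, so no -var-file here
        ["terraform", "apply", "-auto-approve", "-input=false", plan_file],
    ]


def run_pipeline(commands: Sequence[Sequence[str]],
                 runner: Callable[[Sequence[str]], int]) -> list[str]:
    """Run commands in order, stop at the first failure, return what was attempted."""
    attempted = []
    for command in commands:
        attempted.append(" ".join(command))
        if runner(command) != 0:
            break
    return attempted


def subprocess_runner(command: Sequence[str]) -> int:
    """Real runner: execute the command in ./iac and return its exit code."""
    return subprocess.run(list(command), cwd="./iac").returncode
```

Locally, `run_pipeline(terraform_commands(), subprocess_runner)` would reproduce the job, provided the same ARM_* environment variables are exported.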

Build the Docker image, then tag and push it to Azure Container Registry

This section of the workflow defines a job that builds a Docker image and pushes it to a container registry. The job requires the completion of the “setup-iac” job before it can be executed. The job runs on a virtual machine running the Ubuntu operating system.

The steps in the job include checking out the code repository, logging in to the Azure Container Registry with the service principal credentials, building a Docker image from a docker-compose file, tagging the built image with the specified image name, and finally pushing the tagged image to the container registry.

```yaml
  build-deploy-image:
    permissions:
      contents: read
      id-token: write
    runs-on: ubuntu-latest
    needs: setup-iac
    steps:
      # Check out the repo (the docker-compose file lives in ./src)
      - name: 'Checkout GitHub Action'
        uses: actions/checkout@v3

      # Log in to ACR with the service principal credentials
      - name: 'Login to Azure Container Registry'
        uses: azure/docker-login@v1
        with:
          login-server: ${{ env.ACR_LOGON_SERVER }}
          username: ${{ secrets.SERVICE_PRINCIPAL_ID }}
          password: ${{ secrets.SERVICE_PRINCIPAL_PASSWORD }}

      # Build with Compose v2, retag with the ACR name and run number, then push
      - run: docker compose -f ./src/docker-compose.yml build
      - run: docker tag logcornerhub/logcorner-edusync-speech-command ${{ env.IMAGE_NAME }}
      - run: docker push ${{ env.IMAGE_NAME }}
```
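The docker tag step only gives the locally built image a second name; what changes is the registry part of the reference, which tells docker push where to send it. A small Python sketch of how such a reference splits into registry, repository, and tag makes this visible. The parsing follows Docker's usual naming convention in simplified form (digests are ignored), and logcornerregistry is a hypothetical registry name.

```python
def parse_image_reference(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag).

    The first path component is a registry only if it contains '.' or ':'
    or is 'localhost'; otherwise the image lives on Docker Hub.
    A missing tag defaults to 'latest'. Digests (@sha256:...) are not handled.
    """
    name, tag = ref, "latest"
    last_colon = ref.rfind(":")
    if last_colon > ref.rfind("/"):  # a colon after the last slash separates the tag
        name, tag = ref[:last_colon], ref[last_colon + 1:]
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        return first, rest, tag
    return "docker.io", name, tag


# Local image built by docker compose, then the retagged ACR image
print(parse_image_reference("logcornerhub/logcorner-edusync-speech-command"))
print(parse_image_reference("logcornerregistry.azurecr.io/logcorner-edusync-speech-command:42"))
```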

Deploy and manage applications on a Kubernetes cluster using Helm charts and GitHub Actions

This GitHub Actions workflow deploys an application to an Azure Kubernetes Service (AKS) cluster using Helm. Here are the details of the `deploy-to-aks` job:

- Permissions: the job needs permission to read the contents of the repository and to request an OpenID Connect (OIDC) ID token (`id-token: write`), which `azure/login` uses to sign in without a client secret.

- Runs on: the job runs on an Ubuntu Linux virtual machine hosted by GitHub.

- Needs: the `build-deploy-image` job must complete successfully before the `deploy-to-aks` job can start.

- Steps:
  - Check out the repository using the `actions/checkout` action.
  - Log in to Azure using the `azure/login` action with the service principal's client ID, tenant ID, and subscription ID stored in GitHub secrets.
  - Set the AKS cluster context using the `azure/aks-set-context` action and the AKS cluster name and resource group stored in GitHub secrets.
  - Install Helm using the `azure/setup-helm` action.
  - Deploy the application to AKS using the `helm upgrade --install` command. This command upgrades the Helm release, or installs it if it is not installed yet, and sets the specified configuration values. The command deploys the application in a Kubernetes Deployment and exposes it through a Kubernetes Service of type `LoadBalancer` on port 80. The `image.repository`, `image.name`, and `image.tag` values are set to the Azure Container Registry (ACR) login server, the Docker image name, and the GitHub run number, respectively, and `replicaCount` is set to 1. The step also defines an `IMAGE_TAG` environment variable holding the run number, although the `helm upgrade` command reads `github.run_number` directly.

```yaml
  deploy-to-aks:
    permissions:
      contents: read
      id-token: write
    runs-on: ubuntu-latest
    needs: build-deploy-image
    steps:
      - uses: actions/checkout@v3

      # Log in to Azure with OpenID Connect (federated credential, no client secret)
      - name: Azure login
        uses: azure/login@v1.4.6
        with:
          client-id: ${{ secrets.SERVICE_PRINCIPAL_ID }}
          tenant-id: ${{ secrets.AZURE_TENANT_ID }}
          subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}

      # Set the target Azure Kubernetes Service (AKS) cluster.
      - name: Set AKS context
        id: set-context
        uses: azure/aks-set-context@v3
        with:
          resource-group: ${{ secrets.RESOURCE_GROUP_NAME }}
          cluster-name: ${{ secrets.AKS_NAME }}

      - name: Install Helm
        id: install
        uses: azure/setup-helm@v3
        with:
          version: 'latest' # default is latest (stable)
          token: ${{ secrets.GITHUB_TOKEN }} # only needed if version is 'latest'

      # Upgrade the release, or install it on the first run.
      # Note the space before each trailing backslash.
      - name: Deploy to AKS using Helm
        run: |
          helm upgrade --install logcorner-command ./kubernetes/aks/helm-chart/webapi \
            --set image.repository=${{ env.ACR_LOGON_SERVER }} \
            --set image.name=logcorner-edusync-speech-command \
            --set image.tag=${{ github.run_number }} \
            --set replicaCount=1 \
            --set service.type=LoadBalancer \
            --set service.port=80
        env:
          IMAGE_TAG: ${{ github.run_number }}
```
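Each --set flag overrides one value from the chart's values.yaml. The Python sketch below assembles the same helm upgrade --install argument list from a dictionary, which makes the mapping between values and flags explicit. It only builds the command and does not call Helm; the registry name and run number are hypothetical, and how the chart combines the image values is defined in its templates, which are not shown here.

```python
def helm_upgrade_command(release: str, chart: str, values: dict[str, object]) -> list[str]:
    """Build `helm upgrade --install` arguments with one --set per value.

    Values containing commas would need escaping, because --set treats
    commas as separators between assignments.
    """
    command = ["helm", "upgrade", "--install", release, chart]
    for key, value in values.items():
        command += ["--set", f"{key}={value}"]
    return command


# Hypothetical registry name and run number
values = {
    "image.repository": "logcornerregistry.azurecr.io",
    "image.name": "logcorner-edusync-speech-command",
    "image.tag": 42,
    "replicaCount": 1,
    "service.type": "LoadBalancer",
    "service.port": 80,
}
print(" ".join(helm_upgrade_command("logcorner-command", "./kubernetes/aks/helm-chart/webapi", values)))
```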

Note the public IP address of the service's load balancer (20.23.189.10 here); I will need it to test the web API at http://20.23.189.10/swagger/index.html

Set up the SQL Server database

To set up the SQL Server database, I connect through the Azure Bastion host to the management virtual machine. Any virtual machine that can reach the SQL Server through its private endpoint would work, since the database is not reachable from the public internet.

Download and install a tool for managing and administering SQL Server databases, such as SQL Server Management Studio (SSMS), available here: https://learn.microsoft.com/en-us/sql/ssms/download-sql-server-management-studio-ssms?view=sql-server-ver16

You can also use another tool, such as Azure Data Studio.

Connect to the database and run the scripts to create the database schema.

You can find the scripts in the GitHub repository folder: .\LogCorner.EduSync.Speech.Command\src\LogCorner.EduSync.Speech.Database\dbo\Tables

```sql
-- Connect directly to LogCorner.EduSync.Speech.Database: Azure SQL Database
-- does not let USE switch to another database. The brackets are required
-- because the database name contains dots.
USE [LogCorner.EduSync.Speech.Database]
GO

CREATE TABLE [dbo].[EventStore] (
    [Id]          INT              IDENTITY (1, 1) NOT NULL,
    [Version]     BIGINT           NOT NULL,
    [AggregateId] UNIQUEIDENTIFIER NOT NULL,
    [Name]        NVARCHAR (250)   NOT NULL,
    [TypeName]    NVARCHAR (250)   NOT NULL,
    [OccurredOn]  DATETIME         NOT NULL,
    [PayLoad]     TEXT             NOT NULL,
    CONSTRAINT [PK__EventStore] PRIMARY KEY CLUSTERED ([Id] ASC)
);
GO

PRINT N'Creating Table [dbo].[MediaFile]...';
GO

CREATE TABLE [dbo].[MediaFile] (
    [ID]       UNIQUEIDENTIFIER NOT NULL,
    [Url]      NVARCHAR (250)   NULL,
    [SpeechID] UNIQUEIDENTIFIER NOT NULL,
    PRIMARY KEY CLUSTERED ([ID] ASC)
);
GO

PRINT N'Creating Table [dbo].[Speech]...';
GO

CREATE TABLE [dbo].[Speech] (
    [ID]          UNIQUEIDENTIFIER NOT NULL,
    [Title]       NVARCHAR (250)   NOT NULL,
    [Description] NVARCHAR (MAX)   NOT NULL,
    [Url]         NVARCHAR (250)   NOT NULL,
    [Type]        INT              NOT NULL,
    [IsDeleted]   BIT              NULL,
    CONSTRAINT [PK_Presentation] PRIMARY KEY CLUSTERED ([ID] ASC)
);
GO

-- Default values for [dbo].[Speech]: new GUID for ID, 1 for Type, 0 for IsDeleted
PRINT N'Creating Default Constraint unnamed constraint on [dbo].[Speech]...';
GO

ALTER TABLE [dbo].[Speech]
    ADD DEFAULT (newid()) FOR [ID];
GO

PRINT N'Creating Default Constraint unnamed constraint on [dbo].[Speech]...';
GO

ALTER TABLE [dbo].[Speech]
    ADD DEFAULT ((1)) FOR [Type];
GO

PRINT N'Creating Default Constraint unnamed constraint on [dbo].[Speech]...';
GO

ALTER TABLE [dbo].[Speech]
    ADD DEFAULT ((0)) FOR [IsDeleted];
GO

PRINT N'Creating Foreign Key [dbo].[FK_MediaFile_Speech]...';
GO

ALTER TABLE [dbo].[MediaFile]
    ADD CONSTRAINT [FK_MediaFile_Speech] FOREIGN KEY ([SpeechID]) REFERENCES [dbo].[Speech] ([ID]);
GO
```
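These scripts are meant for SSMS or Azure Data Studio, where GO separates batches. If you prefer to run them from code, keep in mind that GO is not a T-SQL statement, so the script has to be split into batches first. A minimal Python sketch, assuming GO never appears alone on a line inside a string literal and the GO count form is not used:

```python
import re

# GO is a batch separator understood by client tools such as SSMS and sqlcmd,
# not by the server, so each batch must be sent separately.
_GO_LINE = re.compile(r"^[ \t]*GO[ \t]*\r?$", re.IGNORECASE | re.MULTILINE)


def split_batches(script: str) -> list[str]:
    """Split a script on lines containing only GO and drop empty batches."""
    return [batch.strip() for batch in _GO_LINE.split(script) if batch.strip()]


script = "USE [LogCorner.EduSync.Speech.Database]\nGO\nCREATE TABLE t (id INT);\ngo\n"
for batch in split_batches(script):
    print(batch)
```

Each returned batch could then be executed on its own through a database driver connected from a machine that reaches the private endpoint.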

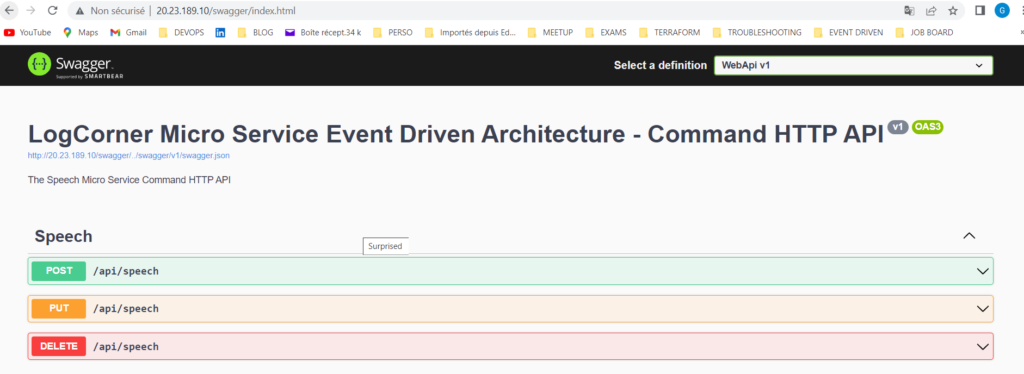

Test the API deployment

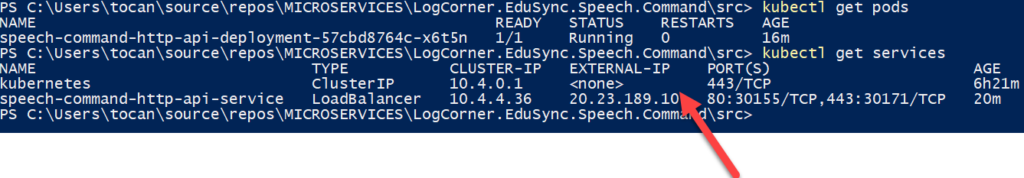

Open a browser at http://20.23.189.10/swagger/index.html, where 20.23.189.10 is the public IP address of the service. You can get it by running kubectl get services:

```text
speech-command-http-api-service   LoadBalancer   10.4.4.36   20.23.189.10   80:30155/TCP,443:30171/TCP
```
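To avoid copying the address by hand, a small Python helper can pick the EXTERNAL-IP column out of a kubectl get services row like the one above and build the Swagger URL. It assumes the default column order (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE) and rejects a service whose load balancer IP is still pending. Alternatively, kubectl can print only the IP with -o jsonpath='{.status.loadBalancer.ingress[0].ip}'.

```python
def swagger_url_from_kubectl(row: str) -> str:
    """Build the Swagger URL from one row of `kubectl get services` output."""
    columns = row.split()
    name, external_ip = columns[0], columns[3]  # NAME and EXTERNAL-IP columns
    if external_ip in ("<pending>", "<none>"):
        raise ValueError(f"service {name} has no external IP yet")
    return f"http://{external_ip}/swagger/index.html"


row = "speech-command-http-api-service LoadBalancer 10.4.4.36 20.23.189.10 80:30155/TCP,443:30171/TCP"
print(swagger_url_from_kubectl(row))
```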

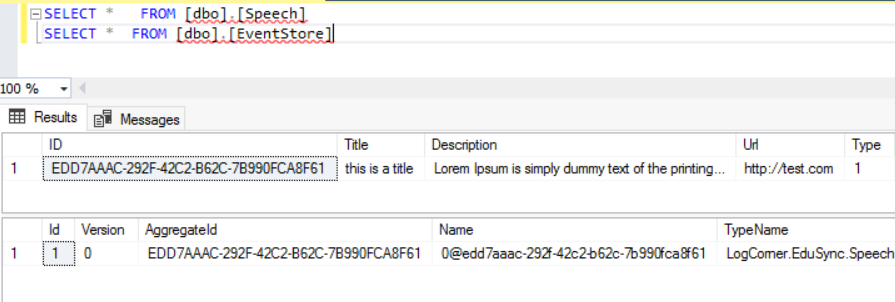

Create a POST request to /api/speech. The body of the request should look like this:

(When the API ran locally in Kubernetes, the same Swagger page was at http://localhost:30124/swagger/index.html.)

```json
{
  "title": "this is a title",
  "description": "Lorem Ipsum is simply dummy text of the printing and typesetting industry. Lorem Ipsum has been the industry's standard dummy text ever since the 1500s, when an unknown printer took a galley of type and scrambled it to make a type specimen book.",
  "url": "http://test.com",
  "typeId": 1
}
```
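The same request can also be sent from code instead of the Swagger UI. A minimal Python sketch using only the standard library builds the POST request with the body shown above; it uses this deployment's IP address (replace it with yours), and the description is shortened for readability.

```python
import json
import urllib.request

# This deployment's load balancer IP; replace it with your own
BASE_URL = "http://20.23.189.10"


def build_create_speech_request(base_url: str, title: str, description: str,
                                url: str, type_id: int) -> urllib.request.Request:
    """Build (without sending) a POST /api/speech request with a JSON body."""
    body = {"title": title, "description": description, "url": url, "typeId": type_id}
    return urllib.request.Request(
        f"{base_url}/api/speech",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_create_speech_request(BASE_URL, "this is a title", "Lorem Ipsum ...",
                                      "http://test.com", 1)
# Sending it requires network access to the cluster:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```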

A new record should now appear in the database.

The source code is available here:

- https://github.com/logcorner/LogCorner.EduSync.Speech.Command/tree/episode-24-helm

Thanks for reading! If you have any feedback, feel free to post it.